A recent report suggests that Israel may be using AI to conduct airstrikes on Palestine. This raises several questions about how AI should be used in warfare and military operations, as Israel becomes the first country to use AI in selecting targets.

There is an adage that says all is fair in war. We’re certain that when that adage was first used, John Lyly, the British poet to whom the line is often attributed, couldn’t have imagined the very concept of AI. But is it really fair when a computer plays out a set of instructions against humans, especially when noncombatant civilians are concerned? Well, that’s when things start getting tricky.

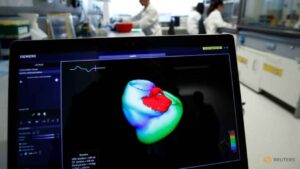

According to reports, Israel’s military is utilizing artificial intelligence (AI) to expedite the identification of targets for airstrikes and handle logistical planning for subsequent operations in the occupied Palestinian territories, amidst increasing tensions.

Using AI For Target-Selection

Bloomberg reported that Israel’s AI-based targeting system can quickly process large volumes of data to prioritize and assign thousands of targets for both manned aircraft and drones, as stated by Israeli military officials. Additionally, the Israel Defense Forces (IDF) utilizes another AI program called Fire Factory, which assists in organizing wartime logistics, including calculating ammunition loads and proposing schedules for each strike.

This revelation follows an escalation in recent weeks of airstrikes by Israel on the occupied West Bank, characterized as a “focused counterterrorism operation.” A May 8 airstrike on the coastal Palestinian territory of Gaza killed at least 15 people. The IDF has also continued to target locations in Syria, further escalating tensions with Israel’s arch-enemy, Iran.

According to Bloomberg, the AI systems employed by the IDF still rely on human operators to verify and authorize each airstrike and raid plan. While the AI technology has significantly reduced the time required for processing, an IDF colonel mentioned that human verification takes a few extra minutes. The use of AI allows the military to achieve more with the same number of personnel.

What about AI hallucinations and errors?

However, experts have expressed concerns regarding the potential consequences of AI errors and the possibility of diminishing human involvement in decision-making as the technology progresses.

Tal Mimran, a law professor at the Hebrew University of Jerusalem and former military adviser, raised questions about accountability if an AI miscalculation occurs and the AI’s decision-making process remains inexplicable. He emphasized the potential for devastating mistakes that could harm entire families.

In summary, although the IDF’s AI systems streamline the targeting and planning process, experts warn about the risks associated with AI errors and the potential for reducing human oversight in the future.

The Ethics Of War and AI

Many scientists and AI developers have warned that AI has the potential to wipe out human civilisation. That is one of the many reasons why some countries have begun discussing how AI should be used in warfare.

Back in February, the Netherlands and South Korea hosted a summit calling for the responsible use of AI in warfare and military operations. As of today, global leaders have been hesitant to agree to any restrictions on its usage out of concern that doing so could put them at a disadvantage.

The US, too, is very keen on getting countries, especially its allies, to accept its doctrine on using AI in warfare. As per the US doctrine, AI’s usage in warfare and military operations can and should be limited to areas such as nuclear weapons safety, responsible system design, personnel training, auditing techniques for military AI capabilities, and financial auditing.

Both the US doctrine and the multi-nation summit hosted by the Netherlands and South Korea pointed out that AI should not be used to determine targets or, more importantly, to engage and eliminate those targets.

Interestingly, the US Air Force successfully tested its first fighter jet fitted with an AI pilot just days before publishing that doctrine.

Certain reports have suggested that Israel’s next weapon in its AI arsenal is a swarm of drones, powered and controlled by AI. Last week, Israeli defence contractor Elbit Systems demonstrated its Legion-X operating system for autonomous drone swarms.