Customer experience is pivotal to a company’s growth. Yet although many contact centres engage with customers regularly, quality monitoring of those interactions is rare. To address this, Observe.ai was founded in 2017 to give call centre agents real-time feedback on customer sentiment and to help them take the best action during a call. The company uses AWS to deliver insights and suggestions based on each call.

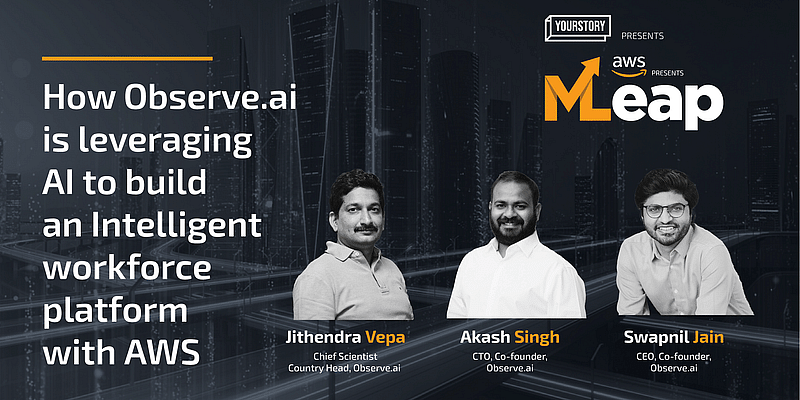

Founded by Swapnil Jain and Akash Singh, Observe.ai has a workforce of 250 employees and more than 150 customers. The duo, along with Jithendra Vepa, Chief Scientist – Country Head, Observe.ai, talk about the company’s vision and mission, the impact they are driving in their domain, and how their collaboration with AWS has been fruitful for their growth.

The mission

Observe.ai, through its cutting-edge technology, wants to make every employee in the contact centre AI-empowered and ultra-productive, helping them continuously improve to drive 10x better business outcomes.

“We want to become a one-stop shop for enterprises to run their support functions. This includes but is not limited to driving operational efficiency, boosting customer experience and retention, fuelling revenue growth, as well as improving compliance, and reducing risk,” shared Akash Singh, Co-founder and CTO, Observe.ai.

As a company, they want to be a “force multiplier” for their customers by delivering value to every stakeholder in customer support centres, which includes agents, QAs, supervisors as well as business executives.

“We offer deep quality monitoring and coaching workflows powered by our state-of-the-art conversational intelligence platform. We have built an automated way of doing the process, which was earlier a manual one,” he said, adding that the platform gives customers actionable insights and analytics across channels such as audio calls, chat, email, and SMS.

Key milestones

The startup has grown 2.5x for two consecutive years, with 120 percent retention. Observe.ai processes more than 50 million calls and over 500 million inferences every month. Its platform is built on AWS and uses several AWS services, including AWS Lambda, AWS Fargate, and Amazon SageMaker.

“We offer the above capabilities built on the foundation of conversational intelligence and are enterprise ready, along with a suite of enterprise readiness capabilities like SSO, administrative features like RBAC, reporting APIs, and compliances like PCI, SOC2, and ISO,” added Akash.

Addressing the problem statement

Describing one use case where AI/ML is core to the offering, Jithendra explained that QA representatives otherwise have to review call interactions manually and rate agents on different aspects. They then share this feedback with the agents’ supervisors. Because every call has to be reviewed by hand, the process is very tedious.

“We have automated this process so that the QA team can evaluate 100 percent calls of an agent and provide more objective feedback to their supervisors. This can improve their performance and requires extracting intents and entities from unstructured data, like calls, and chat conversations,” he shared.

One of the biggest challenges with call interactions is achieving the highest possible transcription accuracy, so that users can search through transcripts seamlessly and perform downstream tasks accurately.

Collaboration with AWS

“AWS Solution Architects help us fast-track innovation by minimising our learning curve and iterating quickly. We also connect with multiple teams in AWS, including service teams and support teams to deep-dive into our use cases and build the right solution. For example, we connected with the Amazon SageMaker service team to get visibility into the SageMaker roadmap, which enhanced our confidence to build on AWS with a long-term vision,” Jithendra explained.

He added that the company also has strong internal talent with expertise in machine learning, NLP, and speech technologies; the team builds its own AI models and deploys them on Amazon SageMaker.

“We evaluated the Amazon SageMaker stack and found that their offering is the most mature and balanced solution in the industry. Amazon SageMaker provides complete access, control, and visibility in each step required to build, train, and deploy models. Furthermore, spot training in Amazon SageMaker has optimised the cost of training models while managing interruptions,” shared Jithendra.

The business impact

“Deployment of our models using Amazon SageMaker has saved us 50 percent of development effort for every deep learning model deployment. The adoption of AWS ML services has helped us to scale our services exponentially with minimal development effort; for example, the number of calls we process in a day increased by 10x in the last two years,” highlighted Jithendra.

The company aims to become a one-stop shop for support functions and is adding more channels, such as email, SMS, and social, to its product while expanding its language capabilities.

“We want to bring AI/ML to every workflow, to every persona of the contact centre. AWS is a core part of our underlying platform. As we scale in the coming years, with the help of the AWS team, we want to drive efficiency as well as innovation in the cloud and ML space,” concluded Akash.
