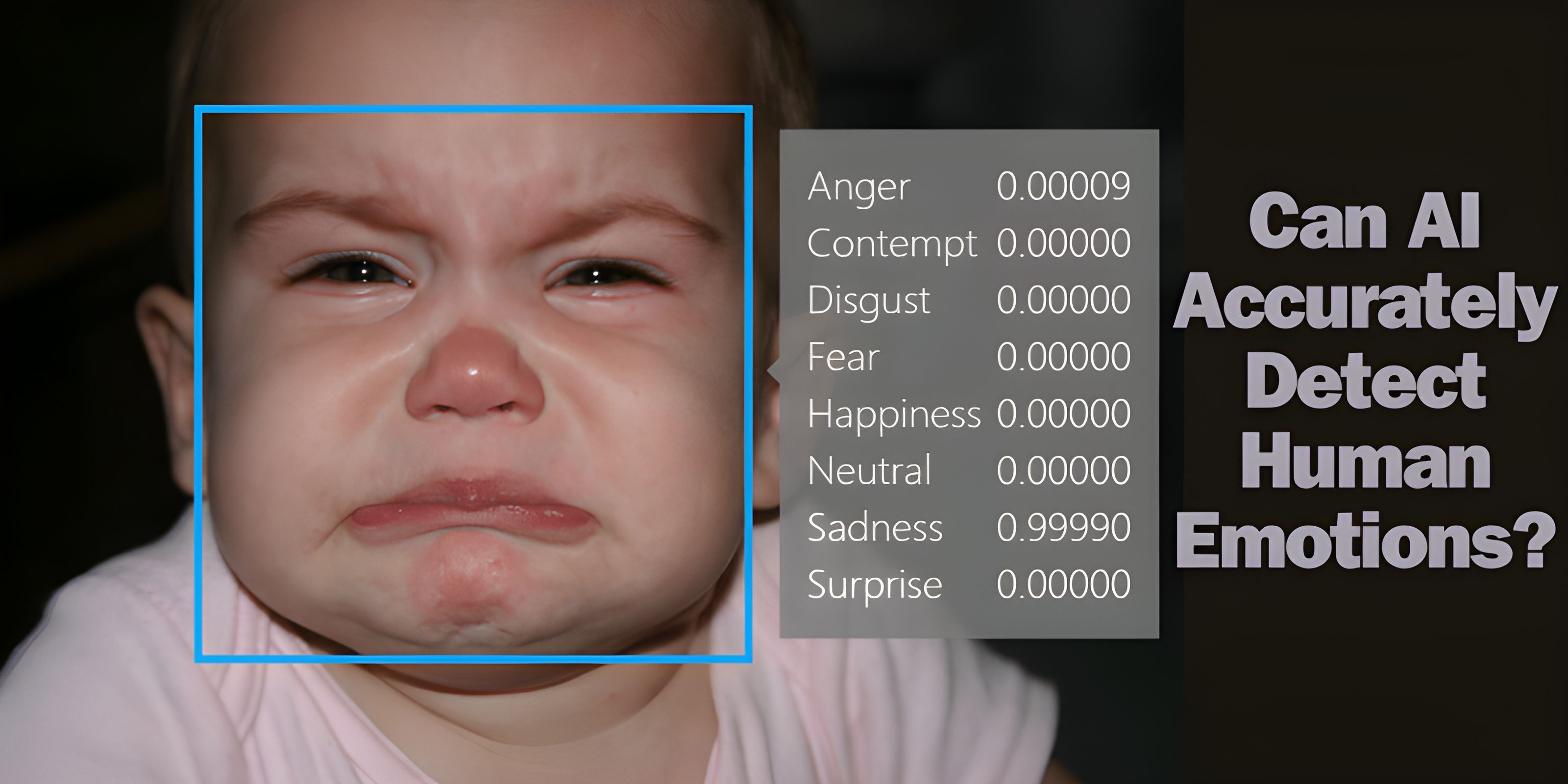

In recent years, significant strides have been made in affective computing, a field concerned with building systems that recognise, interpret, and respond to human emotions. A notable advancement in this area is AffectAura, a multi-sensor setup that continuously predicts the user’s emotional state. The tool captures and assesses emotions over time, enabling users to review and reflect on their emotional experiences.

AffectAura uses an array of equipment, including webcams for facial expression recognition, Kinect sensors for posture analysis, microphones, electrodermal activity sensors, and GPS trackers. Machine learning algorithms combine these inputs to estimate three affective dimensions: valence (positive or negative emotion), arousal (emotional intensity), and engagement. The system’s visualisation encodes arousal as shape and engagement as opacity, which helps users recall past events.
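To make the visual encoding concrete, here is a minimal sketch of how one affect estimate could be turned into drawing attributes. It is not AffectAura's actual code: the `AffectEstimate` type, the `to_glyph` function, the value ranges, the 0.5 threshold, and the shape names `"burst"` and `"circle"` are all assumptions made for illustration. Only the idea that shape carries arousal and opacity carries engagement comes from the description above.

```python
from dataclasses import dataclass


@dataclass
class AffectEstimate:
    """One predicted affective state (hypothetical structure and ranges)."""
    valence: float     # assumed range: -1.0 (negative) to 1.0 (positive)
    arousal: float     # assumed range: 0.0 (calm) to 1.0 (intense)
    engagement: float  # assumed range: 0.0 (disengaged) to 1.0 (engaged)


def to_glyph(est: AffectEstimate) -> dict:
    """Map an estimate to drawing attributes: shape for arousal, opacity for engagement."""
    # Assumed rule: a spiky shape for high arousal, a smooth one for low arousal.
    shape = "burst" if est.arousal >= 0.5 else "circle"
    # Clamp engagement into [0, 1] so it is always a valid opacity.
    opacity = min(1.0, max(0.0, est.engagement))
    # Valence is left unmapped here because the text does not say how it is drawn.
    return {"shape": shape, "opacity": opacity}


print(to_glyph(AffectEstimate(valence=0.2, arousal=0.8, engagement=0.25)))
```

A timeline of such glyphs, one per time window, is the kind of display a user could scan when trying to recall what happened during a day.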

A key finding from the AffectAura study is that people generally remember recent interactions better than older ones. Interestingly, people also tend to misremember events as more positive than they were, which suggests that negative memories might fade more quickly than positive ones. The study also reveals that people often reconstruct past events from affective cues, which can sometimes lead to false memories.

In a similar vein, another research project led by D’Mello et al. in 2007 explored an affect-sensing tutoring system, AutoTutor, designed to enhance learning in subjects like Newtonian physics. AutoTutor responds to the learner’s emotional states through a combination of video analysis of facial expressions, posture monitoring, and dialogue interaction. This affective loop is intended to adapt the tutoring based on the learner’s emotions, such as confusion or frustration.
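The affective loop described above can be sketched in a few lines. This is an illustration under stated assumptions, not AutoTutor's implementation: the `fuse` and `choose_tutor_move` functions, the channel names, the score-summing rule, and the tutor moves are all hypothetical. The sketch only shows the general shape of the loop: combine per-channel evidence into one emotion label, then pick a response.

```python
from collections import defaultdict
from typing import Dict


def fuse(channel_scores: Dict[str, Dict[str, float]]) -> str:
    """Combine per-channel emotion scores by summing them and taking the top label.

    Summation is an assumed fusion rule chosen for simplicity.
    """
    totals: Dict[str, float] = defaultdict(float)
    for scores in channel_scores.values():
        for label, score in scores.items():
            totals[label] += score
    # With no evidence at all, fall back to a neutral state.
    return max(totals, key=totals.get) if totals else "neutral"


def choose_tutor_move(state: str) -> str:
    """Pick a tutoring response for a detected state (hypothetical moves)."""
    moves = {
        "confusion": "offer_hint",
        "frustration": "encourage_and_simplify",
    }
    return moves.get(state, "continue")


# Hypothetical readings from the three channels mentioned in the text.
readings = {
    "face": {"confusion": 0.6, "boredom": 0.1},
    "posture": {"boredom": 0.3, "confusion": 0.2},
    "dialogue": {"frustration": 0.5},
}
state = fuse(readings)
print(state, choose_tutor_move(state))  # prints: confusion offer_hint
```

In a running system this pair of steps would repeat on every dialogue turn, so the tutor's behaviour tracks the learner's changing state.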

One striking observation from the AutoTutor study was that students often displayed more emotional responses later in sessions, especially when faced with challenging questions or negative feedback. Posture analysis revealed that boredom was frequently associated with fidgeting, challenging the notion that it correlates with inactivity.

These studies underscore the potential of technology to recognise and respond to human emotions. By understanding and reacting to emotional states, technology can be tailored to enhance user experiences, whether in learning environments or in everyday interactions. Ongoing research in affective computing points towards technology that empathises with and adapts to our emotional needs, creating more intuitive and responsive user experiences.
