Microsoft recently suffered a serious lapse in securing internal data. The incident highlights growing concerns about how AI teams handle data and the increasing need for stronger safeguards. Let’s look at what happened and what it means for the tech world.

A Huge Data Leak Comes to Light

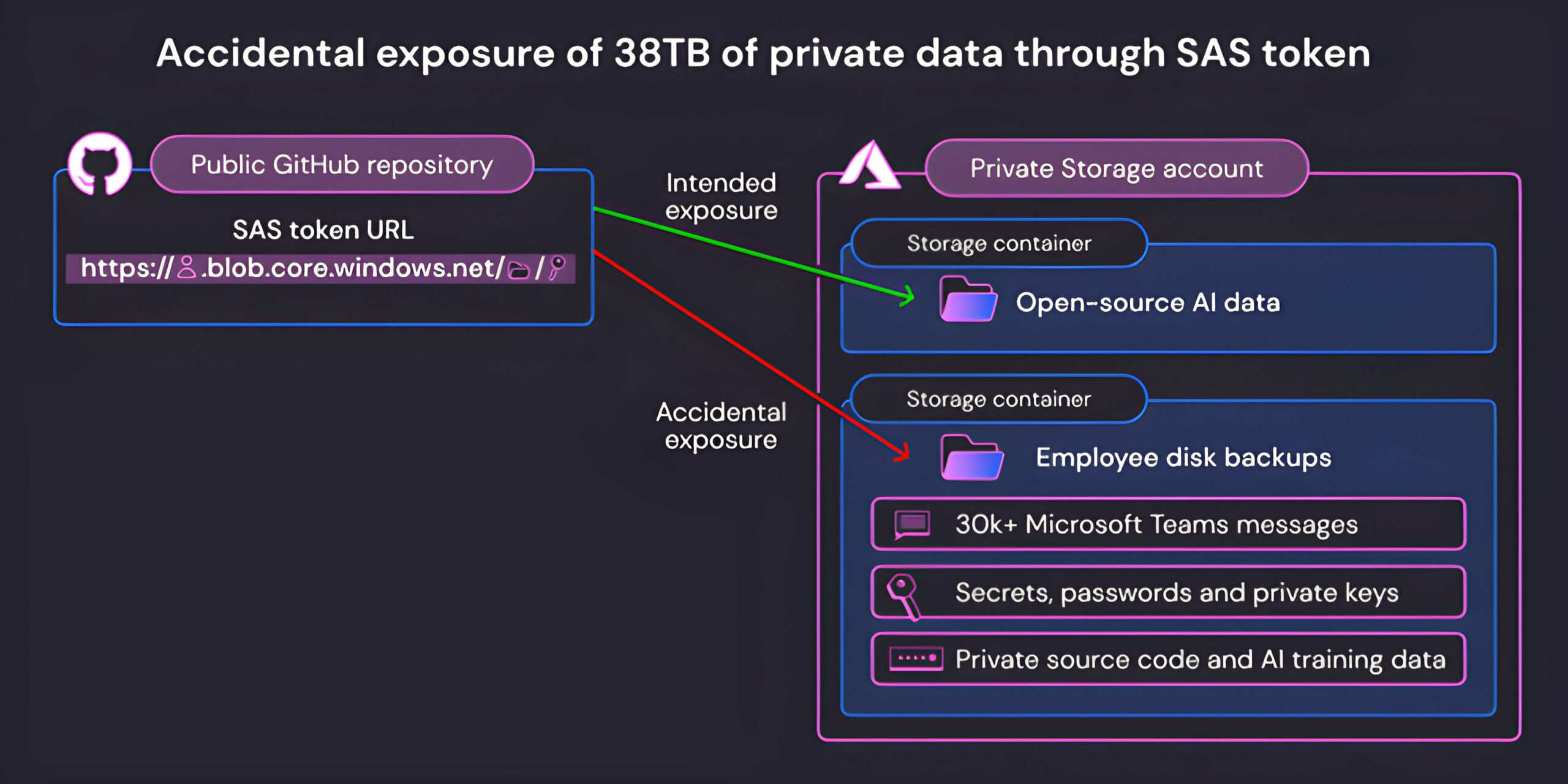

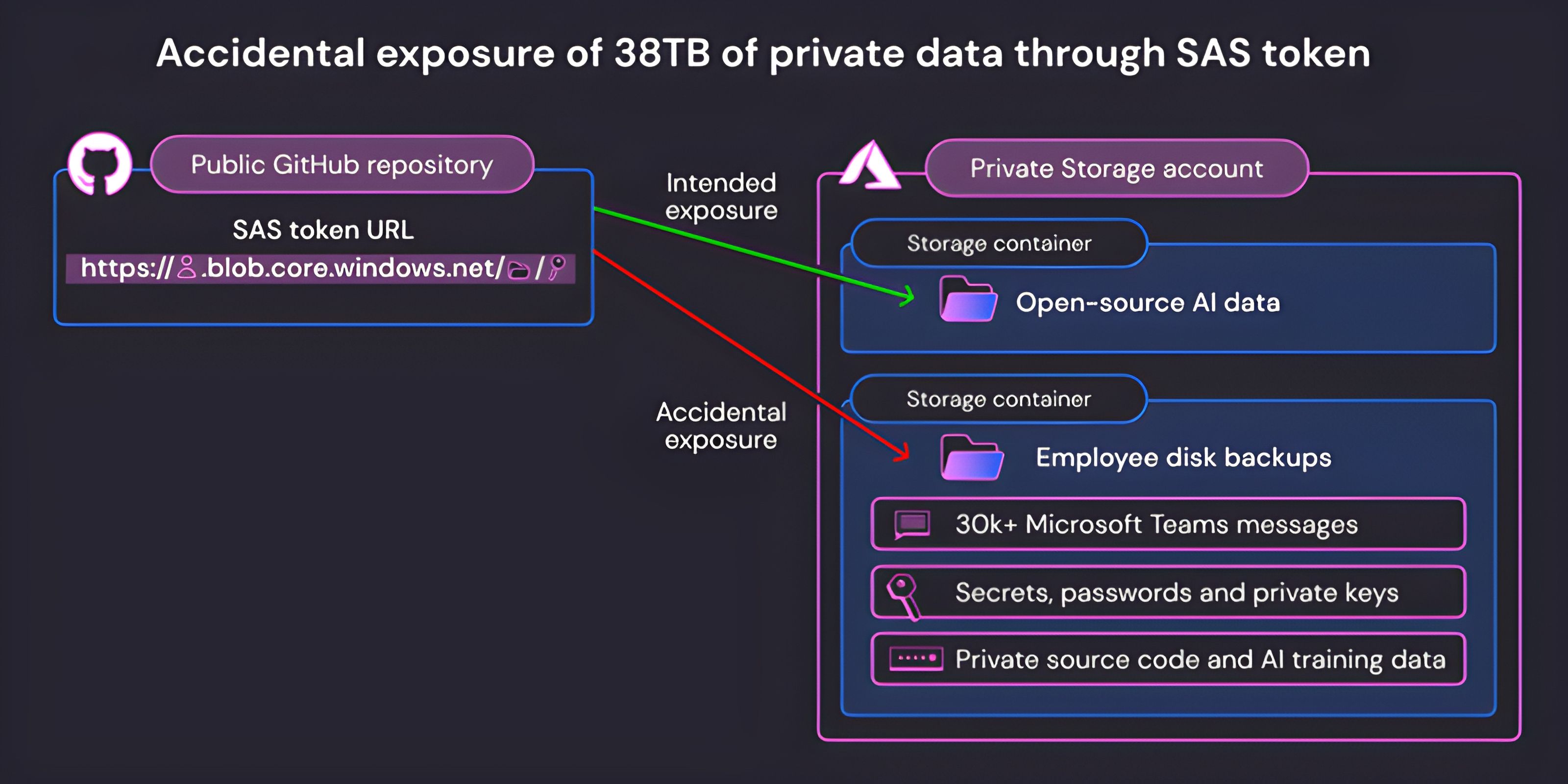

The cloud security company Wiz disclosed a significant mistake at Microsoft that lasted almost three years. The error, caused by misconfiguring a data-sharing feature on the Azure platform, exposed 38 terabytes of private data to the public. The exposure ran from July 20, 2020, to June 24, 2023, through a public GitHub repository maintained by Microsoft’s AI team.

This GitHub repository, named “robust-models-transfer,” provided open-source code and AI models for image recognition. Through a link to Azure storage, it inadvertently exposed sensitive information, including backups of employee workstations, secret keys, passwords, and a large volume of internal Microsoft Teams messages.

The Main Issue: Incorrect Use of SAS Tokens

At the heart of the mistake was the misuse of Shared Access Signature (SAS) tokens, an Azure feature that grants access to stored data through a signed URL. Wiz considers these tokens a security weak point because Azure offers no central way to manage or monitor them. In this case, Microsoft’s team shared a SAS token that was too permissive, accidentally giving the public access to a large quantity of data in a misconfigured Azure storage account.

Despite their usefulness, these tokens are a security risk because they can remain valid for a very long time, which makes their use hard to control. The first token was valid from July 2020 to October 2021, and a second was set to expire in 2051.
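To make the two risk factors concrete (excessive permissions and a distant expiry date), here is a minimal Python sketch that inspects the query string of a SAS URL. It assumes the standard `sp` (permissions) and `se` (expiry) query fields. The function names, the seven-day threshold, and the example URL are illustrative assumptions, not anything taken from Microsoft or Wiz.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import parse_qs, urlsplit

# Permission letters that allow changing data rather than only reading it.
WRITE_LIKE = set("wdac")  # write, delete, add, create


def parse_sas_time(value):
    """Parse an ISO 8601 UTC timestamp as used in the `se` field."""
    parsed = datetime.fromisoformat(value.replace("Z", "+00:00"))
    if parsed.tzinfo is None:  # date-only values carry no offset
        parsed = parsed.replace(tzinfo=timezone.utc)
    return parsed


def audit_sas_url(url, now, max_lifetime=timedelta(days=7)):
    """Return a list of human-readable findings for one SAS URL."""
    params = parse_qs(urlsplit(url).query)
    findings = []

    # Flag any permission beyond plain read/list access.
    permissions = set(params.get("sp", [""])[0])
    risky = sorted(permissions & WRITE_LIKE)
    if risky:
        findings.append("grants more than read access: " + "".join(risky))

    # Flag a missing expiry or one that lies too far in the future.
    expiry = params.get("se", [None])[0]
    if expiry is None:
        findings.append("no expiry field found")
    elif parse_sas_time(expiry) - now > max_lifetime:
        findings.append("expires more than %d days from now" % max_lifetime.days)

    return findings


# Hypothetical URL: the account, container, and signature are made up.
example = (
    "https://exampleaccount.blob.core.windows.net/models"
    "?sv=2020-08-04&sp=racwdl&se=2051-10-06T00:00:00Z&sig=FAKE"
)
for finding in audit_sas_url(example, datetime(2021, 10, 6, tzinfo=timezone.utc)):
    print(finding)
```

A check like this only reads what the URL itself declares. It cannot tell whether a token has already been revoked on the server side, so it complements access-key rotation and storage logging rather than replacing them.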

Addressing the Problem

After Wiz reported the issue, Microsoft quickly revoked the unsafe token and blocked outside access to the affected storage account, limiting further risk. The company said that no customer data was exposed and that customers did not need to take any action.

To prevent a repeat, Microsoft has improved its scanning services so that risky SAS tokens are identified quickly. It also fixed a flaw in its system that had previously dismissed this particular SAS URL as a false positive.
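As a rough illustration of what scanning for SAS tokens can look like, the Python sketch below searches text (for example a README or a source file) for URLs that point at Azure Storage and carry a `sig=` signature parameter. This is not Microsoft’s scanner. The regular expression and the function name are assumptions made for this example, and a real scanner would need to cover more URL shapes.

```python
import re

# Assumption: a SAS URL is an https URL on an Azure Storage host whose
# query string contains a `sig=` signature parameter.
SAS_URL = re.compile(
    r"https://[a-z0-9]{3,24}\.(?:blob|file|queue|table|dfs)\.core\.windows\.net"
    r"[^\s\"'<>?]*\?[^\s\"'<>]*\bsig=[^\s\"'<>]+"
)


def find_sas_urls(text):
    """Return (line_number, url) pairs for every candidate SAS URL in text."""
    hits = []
    for number, line in enumerate(text.splitlines(), start=1):
        for match in SAS_URL.finditer(line):
            hits.append((number, match.group(0)))
    return hits


# Hypothetical file contents: the account name and signature are made up.
sample = (
    "Download the models from:\n"
    'url = "https://exampleaccount.blob.core.windows.net/models/x.ckpt'
    '?sv=2020-08-04&sp=r&sig=abc%3D"\n'
    "Docs: https://exampleaccount.blob.core.windows.net/models/readme.txt\n"
)
for line_number, url in find_sas_urls(sample):
    print(line_number, url)
```

Plain storage links without a signature, such as the last line of the sample, are deliberately ignored, because only a signed URL carries access rights with it.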

A Broader View: Strengthening AI Tech Security

This incident highlights the risks inherent in AI development, which often involves sharing or using large datasets in public projects. As AI adoption grows rapidly, Wiz warns that such exposures are becoming harder to prevent and monitor.

The event shows that the tech industry needs to strengthen its security practices, especially when building new AI products. Wiz’s CTO, Ami Luttwak, emphasises the need for careful checks and safeguards, urging companies to act decisively to avoid similar incidents.

At a time when data is extremely valuable, this incident underlines the rising need for stronger security practices in the fast-moving AI field. As big companies like Microsoft lead AI development, better data security is crucial. The incident should encourage a culture of caution and strict security rules, so that rapid progress does not come at the expense of data safety.
